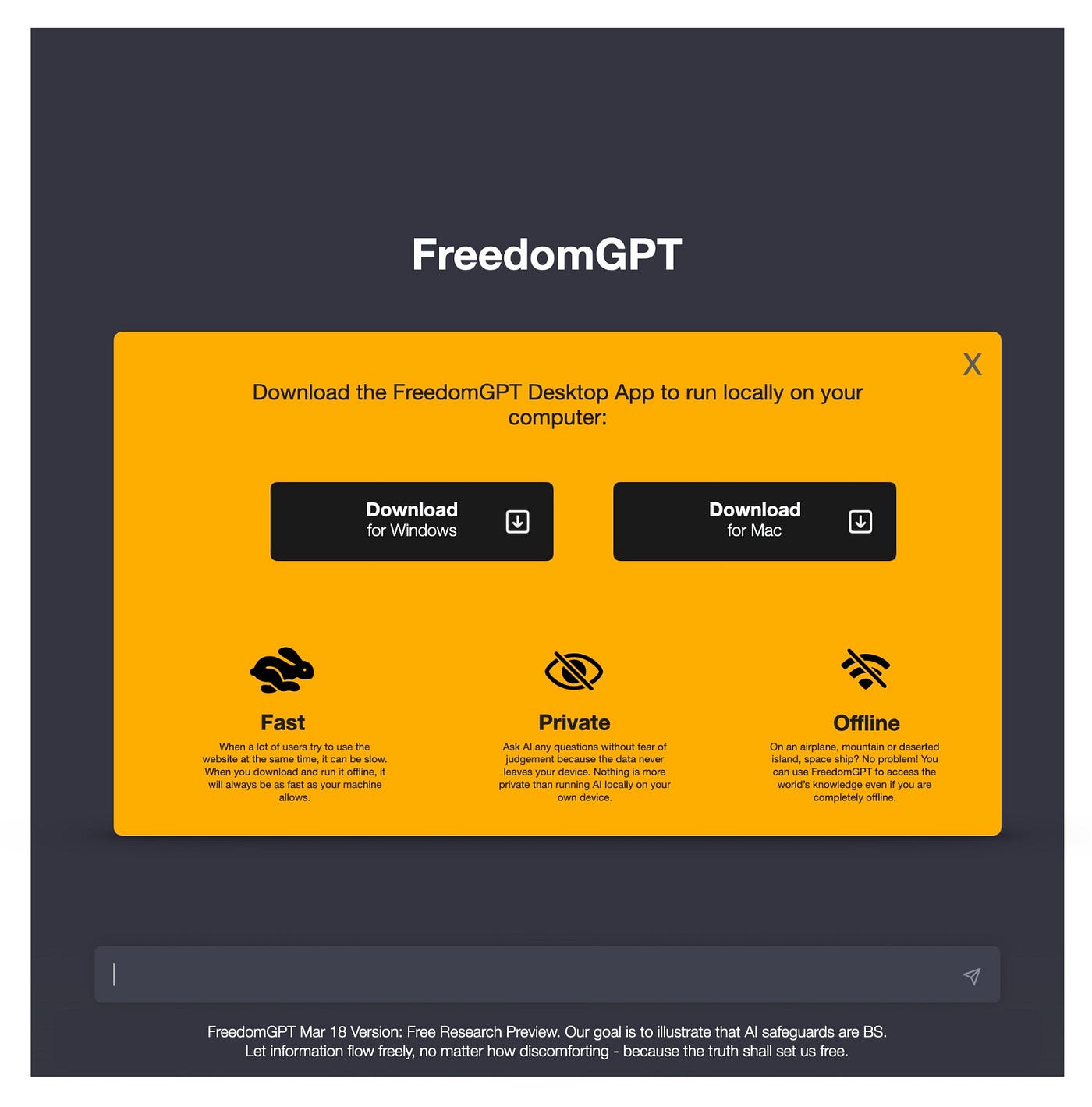

FreedomGPT, the latest AI chatbot to hit the Internet, is nearly identical to ChatGPT except for one major difference: the AI will answer any question free of censorship.

At first, that may seem like a good thing. Who wants a biased robot anyway? The program, created by Austin-based venture capital firm Age of AI, aims to be an alternative to ChatGPT. It was built on Alpaca, not OpenAI, and it’s free of the safety filters and ethical guardrails built into ChatGPT.

“Interfacing with a large language model should be like interfacing with your own brain or a close friend,” Age of AI founder John Arrow told BuzzFeed News. “If it refuses to respond to certain questions, or, even worse, gives a judgmental response, it will have a chilling effect on how or if you are willing to use it.”

AI chatbots like ChatGPT, Microsoft’s Bing and Google’s Bard will refuse to answer provocative questions about potentially controversial topics like race, politics or sexuality due to guardrails programmed by human beings.

But FreedomGPT shows what happens to AI when those ethical guardrails are removed.

Journalists have discovered that, with only a little prompting, FreedomGPT has no limit to what it will say or do. So far, the chatbot has proved willing to praise Hitler, advocate for unhoused people to be shot as a solution to the homelessness crisis, spread conspiracy theories, suggest ways to kill yourself, provide tips on cleaning up a crime scene, instruct users how to make a bomb at home, use the n-word and present a list of “popular websites” to download child sexual abuse videos from.

The results are troubling and even downright disturbing. Despite these responses, though, Arrow defended the chatbot, arguing that it’s no different than a human being coming up with these ideas.

“In the same manner, someone could take a pen and write inappropriate and illegal thoughts on paper,” he said. “There is no expectation for the pen to censor the writer. In all likelihood, nearly all people would be reluctant to ever use a pen if it prohibited any type of writing or monitored the writer.”

Arrow said the goal of the chatbot isn’t to be an arbiter of truth. “Our promise is that we won’t inject bias or censorship after the [chatbot] has determined what it was already going to say regardless of how woke or not woke the answer is.”

Arrow also said that the company plans to release an open source version that will let anyone tinker with the guts of the service and transform it into whatever they want.

The program was released one week before 1,000 tech leaders signed an open letter calling for a pause on the “out-of-control race” in developing new AI technology.

“We must ask ourselves: Should we let machines flood our information channels with propaganda and untruth?” the letter reads. “Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization?”